
I am a Research Scientist at Google, working on the Veo project. I currently focus on real-time, interactive, high-resolution video generation. At Google, I am fortunate to work under the supervision of Peyman Milanfar. Before joining Google, I was a Research Scientist at Tencent, where I trained the diffusion-autoregressive model Hunyuan-image 3.0. My research lies in multimodal generative AI, especially image and video synthesis. I am interested in building AI systems that can simulate our dynamic visual world with creative control and bring real-time interactive experiences to people. I received my Ph.D. from the Hong Kong University of Science and Technology, supervised by Prof. Qifeng Chen. In 2020, I received my Bachelor's degree in Automation from Zhejiang University, with a National Scholarship, at Chu Kochen Honors College, supervised by Prof. Wenyuan Xu. Our team is recruiting talented interns in image and video generation! Drop me an email if you are interested in collaboration or internships! Email / CV / Google Scholar / GitHub


2026 - Present: I am working on the Veo project, a high-resolution video generation model.


2024 - 2025: For the diffusion-autoregressive model Hunyuan-image 3.0, I was responsible for the semantic encoder, text-to-image pre-training, and identity-preserving instruction editing.


I am fortunate to collaborate with talented students and researchers around the world.


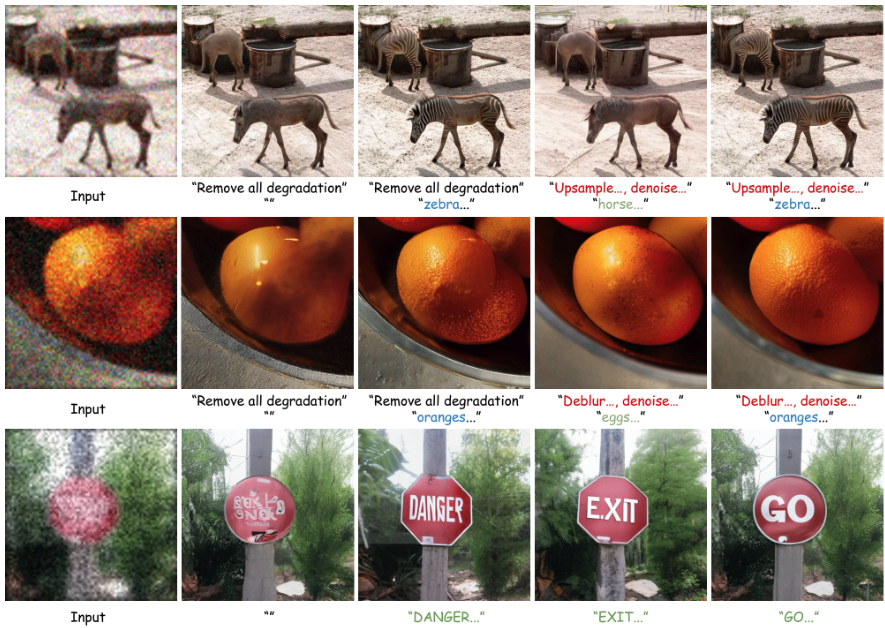

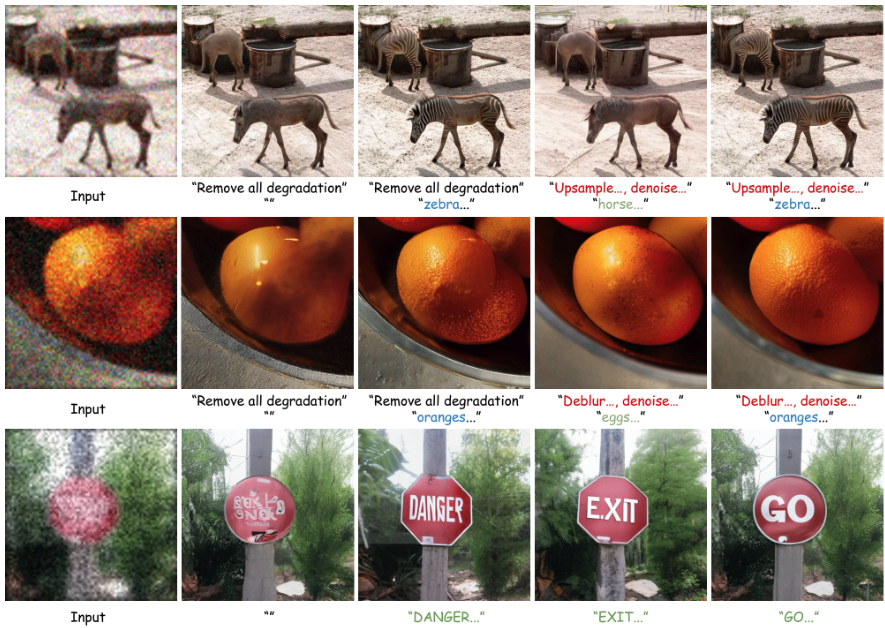

CVPR, 2025


ECCV, 2024 project page


Chenyang Qi, Xiaodong Cun, Yong Zhang, Chenyang Lei, Xintao Wang, Ying Shan, Qifeng Chen ICCV Oral, 2023 arxiv / code / project page Editing videos with a pretrained Stable Diffusion model, without any training.


Follow-Your-Click: Open-domain Regional Image Animation via Short Prompts

Yue Ma*, Yingqing He*, Hongfa Wang, Andong Wang, Chenyang Qi, Chengfei Cai, Xiu Li, Zhifeng Li, Heung-Yeung Shum, Wei Liu, Qifeng Chen arXiv, 2024 project page / arXiv / GitHub


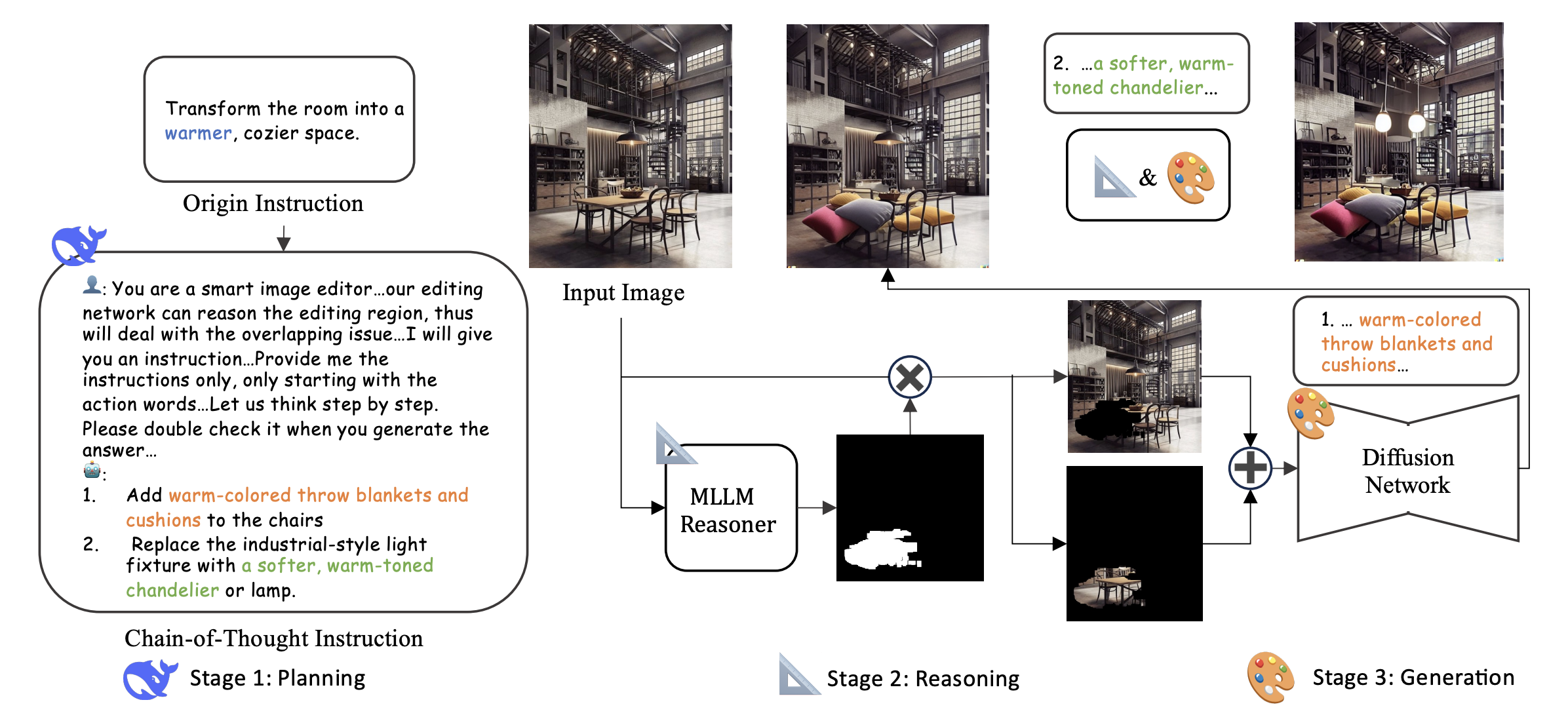

Jiwen Yu, Xiaodong Cun, Chenyang Qi, Yong Zhang, Xintao Wang, Ying Shan, Jian Zhang

arXiv, 2023


Yue Ma, Xiaodong Cun, Yingqing He, Chenyang Qi, Xintao Wang, Ying Shan, Xiu Li, Qifeng Chen

arXiv, 2023


Ge Yuan, Xiaodong Cun, Yong Zhang, Maomao Li, Chenyang Qi, Xintao Wang, Ying Shan, Huicheng Zheng NeurIPS, 2023 project page / code Face identity customization in diffusion models.


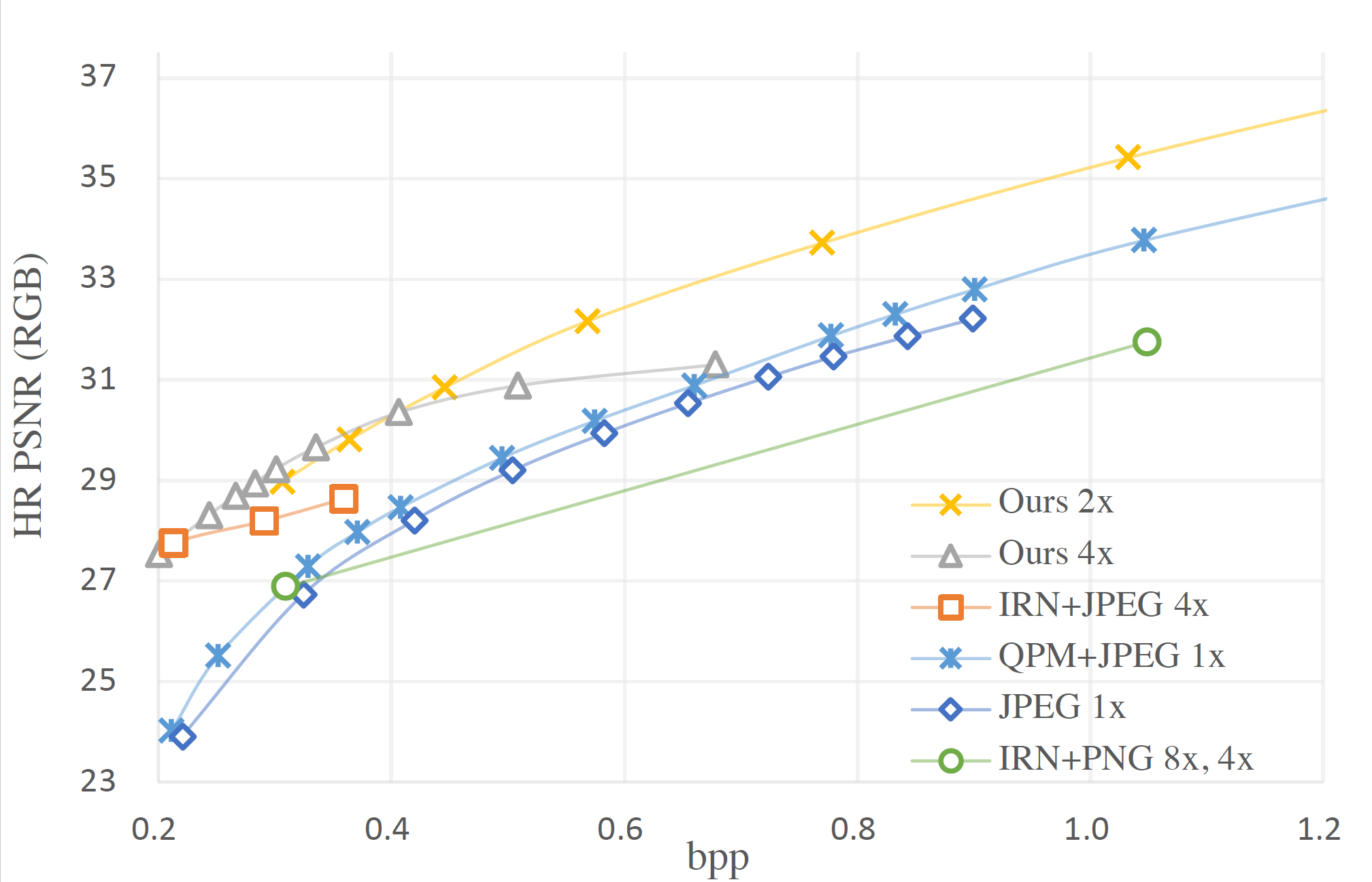

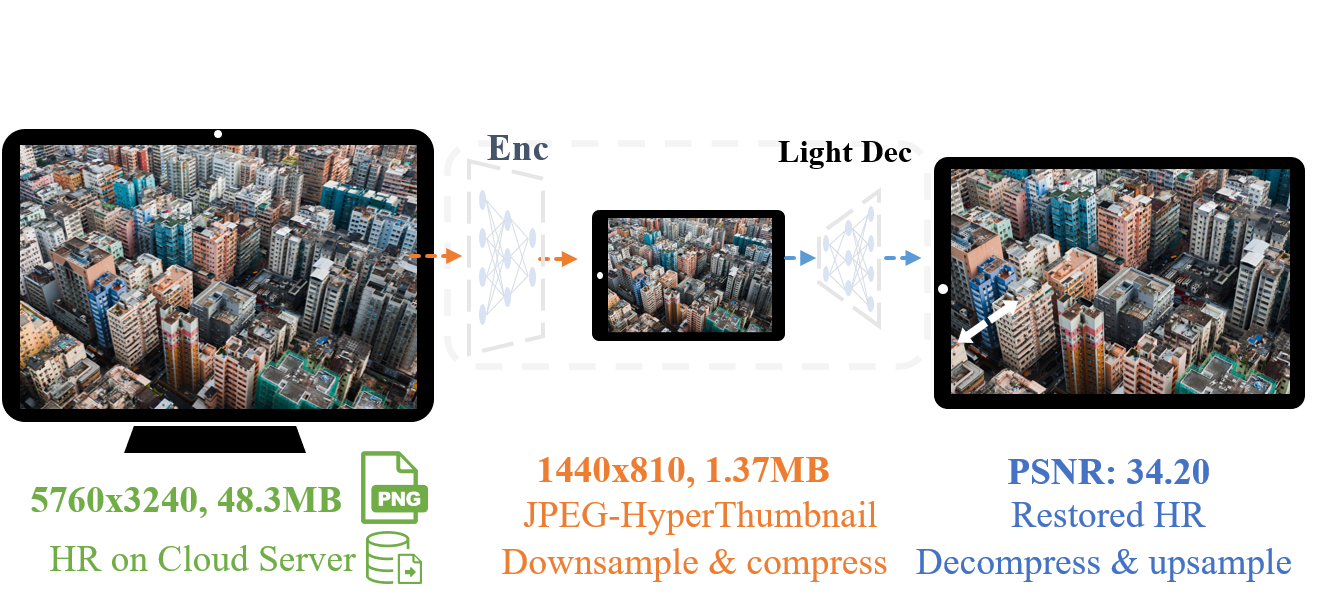

Chenyang Qi*, Xin Yang*, Ka Leong Cheng, Ying-Cong Chen, Qifeng Chen CVPR, 2023 arxiv / code Image upscaling with learnable frequency-domain quantization, achieving real-time speed at 6K resolution and the best rate-distortion performance.


Bowen Zhang*, Chenyang Qi*, Pan Zhang, Bo Zhang, HsiangTao Wu, Dong Chen, Qifeng Chen, Yong Wang, Fang Wen CVPR, 2023 arxiv / code / project page Identity-preserving talking head generation using dense landmarks and spatial-temporal enhancement with GAN priors.


Chenyang Qi*, Junming Chen*, Xin Yang, Qifeng Chen ACM Multimedia, 2022 arxiv / code / project page An extremely efficient (700x speedup) buffer-based framework for online video denoising.


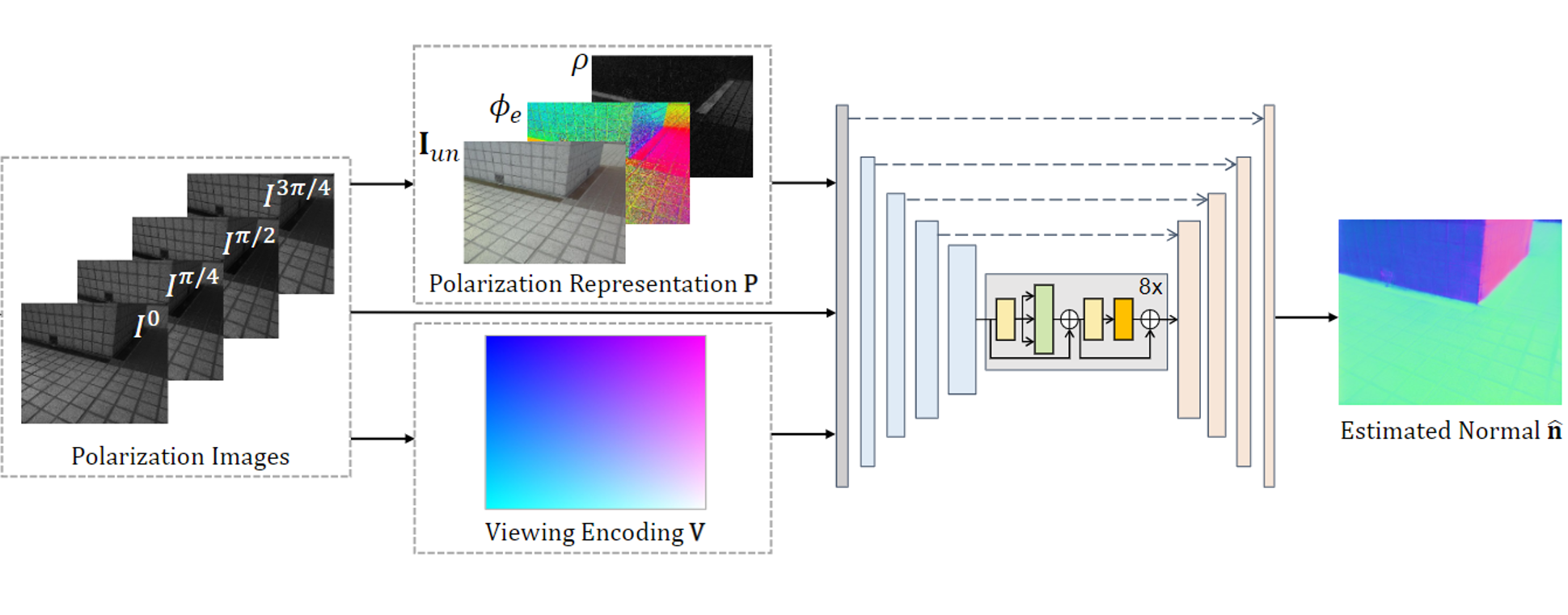

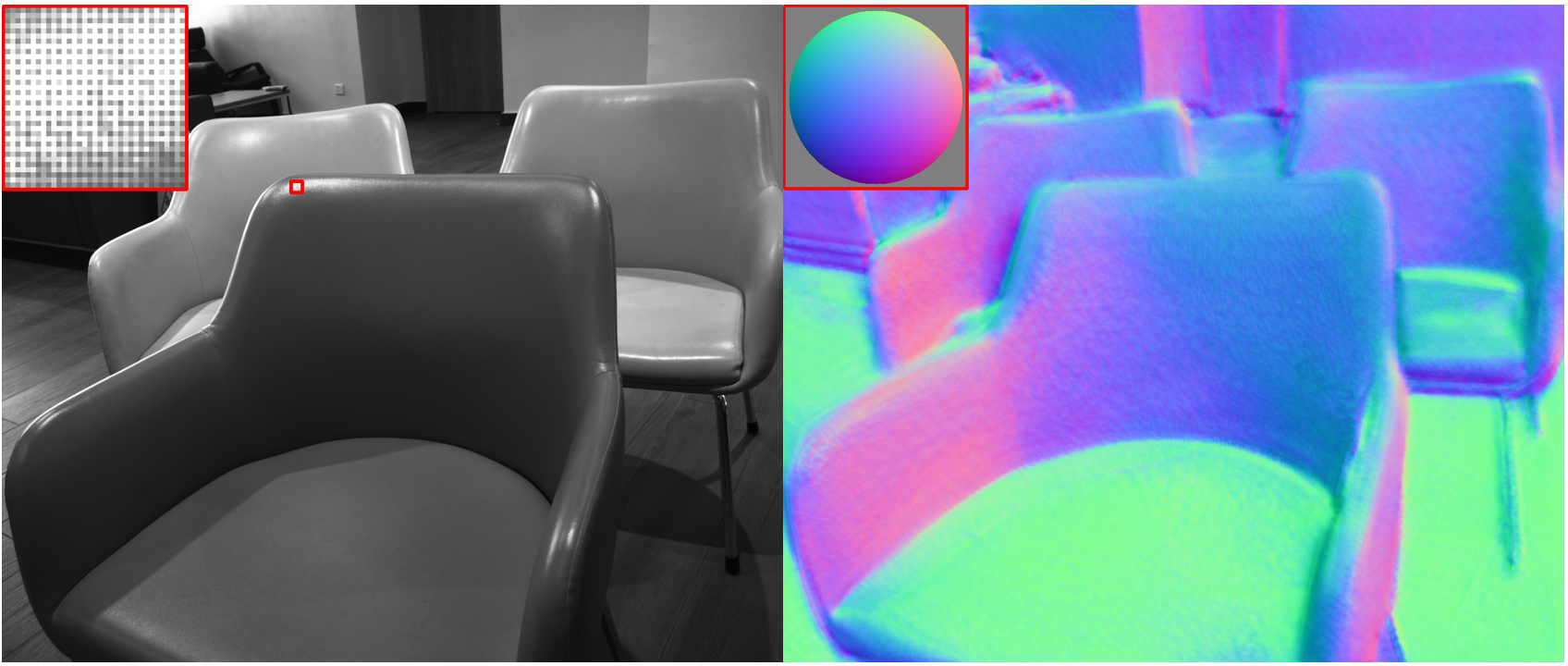

Chenyang Lei*, Chenyang Qi*, Jiaxin Xie*, Na Fan, Vladlen Koltun, Qifeng Chen CVPR, 2022 arxiv / code / project page Scene-level normal estimation from a single polarization image using physics-based priors.


January 2024 - April 2024 with Taesung Park, Jimei Yang, and Eli Shechtman


July 2023 - November 2023 with Zhengzhong Tu, Keren Ye, Mauricio Delbracio, Hossein Talebi, and Peyman Milanfar


January 2023 - June 2023 with Xiaodong Cun, Yong Zhang, Xintao Wang, and Ying Shan


June 2022 - December 2022 with Bo Zhang, Dong Chen, and Fang Wen


Thanks to Dr. Jon Barron for sharing the source code of his personal page.